Why AI agents are getting better

And the implications for SaaS leaders building agentic applications

On Jan 23rd, 2025, OpenAI announced its first agentic product, Operator. A mere 9 days later on Feb 3rd, they launched their second agentic product, Deep Research. 19 days later, Anthropic released the first hybrid reasoning model Claude 3.7 Sonnet and their first agentic product, Claude Code, in preview.

These are significant events in the “AI Agent” timeline. If you have been generally online, you would think from the many announcements over the past year that “billions” of long-running, useful, AI agents are already being used by customers everywhere. That’s not quite true. Customer support and coding were probably the only two use cases with some very early, limited agentic success until now.

As this industry somewhat matures, we need to distinguish between LLM workflows and AI agents. Here’s how Anthropic describes the difference (paraphrased):

At Anthropic, we draw an important architectural distinction between AI workflows and agents: Workflows are systems where LLMs and tools are orchestrated through predefined code paths.

Agents, on the other hand, are fully autonomous systems with memory where LLMs dynamically direct their own processes and tool usage over extended periods if necessary, maintaining control over how they accomplish tasks.

No surprise then that the leading AI labs only announced their first agentic products this year. The breakthrough in inference-time compute scaling combined with “tool-use RL” is making it possible to train more reliable, intelligent agents that don’t compound but correct errors as they compute.

Just a few months ago, no one outside the leading AI labs had access to these models at all. Now, almost everyone does - kind of. In this post, we dig into exactly how these agents were built and the considerable implications for SaaS developers.

Reasoning models w/ open compute budgets

Agentic products getting widespread adoption are built on reasoning models. OpenAI’s o1 was the first “slow thinking” model, released in Dec 2024 - just 3 months ago. Moreover, agentic products like Deep Research undergo supervised fine-tuning (SFT) to optimize their outputs for specific problems. You and I can’t replicate the Deep Research product by prompting o1 to think more. Finetuning and post-training OpenAI’s reasoning models continue to be very expensive.

This is where open source reasoning models like DeepSeek R1 and Alibaba’s Qwen come in. Their performance is competitive with o3-mini, o1, Sonnet 3.7 etc; they are cheap and you can post-train them and even deploy them on-premise as a large company that wants to experiment securely.

Turns out that post-training is crucial. This is where RL - a traditional supervised machine learning technique has come back into vogue and is pretty much considered the road to AGI by believers.

End-to-end training: RL with Tool Use

Agents like Operator, Deep Research and Calude Code have been trained on their tasks end-to-end using reinforcement learning (RL) in a complex environment where the fine-tuned models use tools during the training process.

How Deep Research works

Deep research was trained using end-to-end reinforcement learning on hard browsing and reasoning tasks across a range of domains. Through that training, it learned to plan and execute a multi-step trajectory to find the data it needs, backtracking and reacting to real-time information where necessary. The model is also able to browse over user uploaded files, plot and iterate on graphs using the python tool, embed both generated graphs and images from websites in its responses, and cite specific sentences or passages from its sources.

Much of software relies on interoperable systems. Today, human beings patch together workflows that are not integrated well together. Agentic tool use is an incredibly important aspect of how software can address this gap in the future.

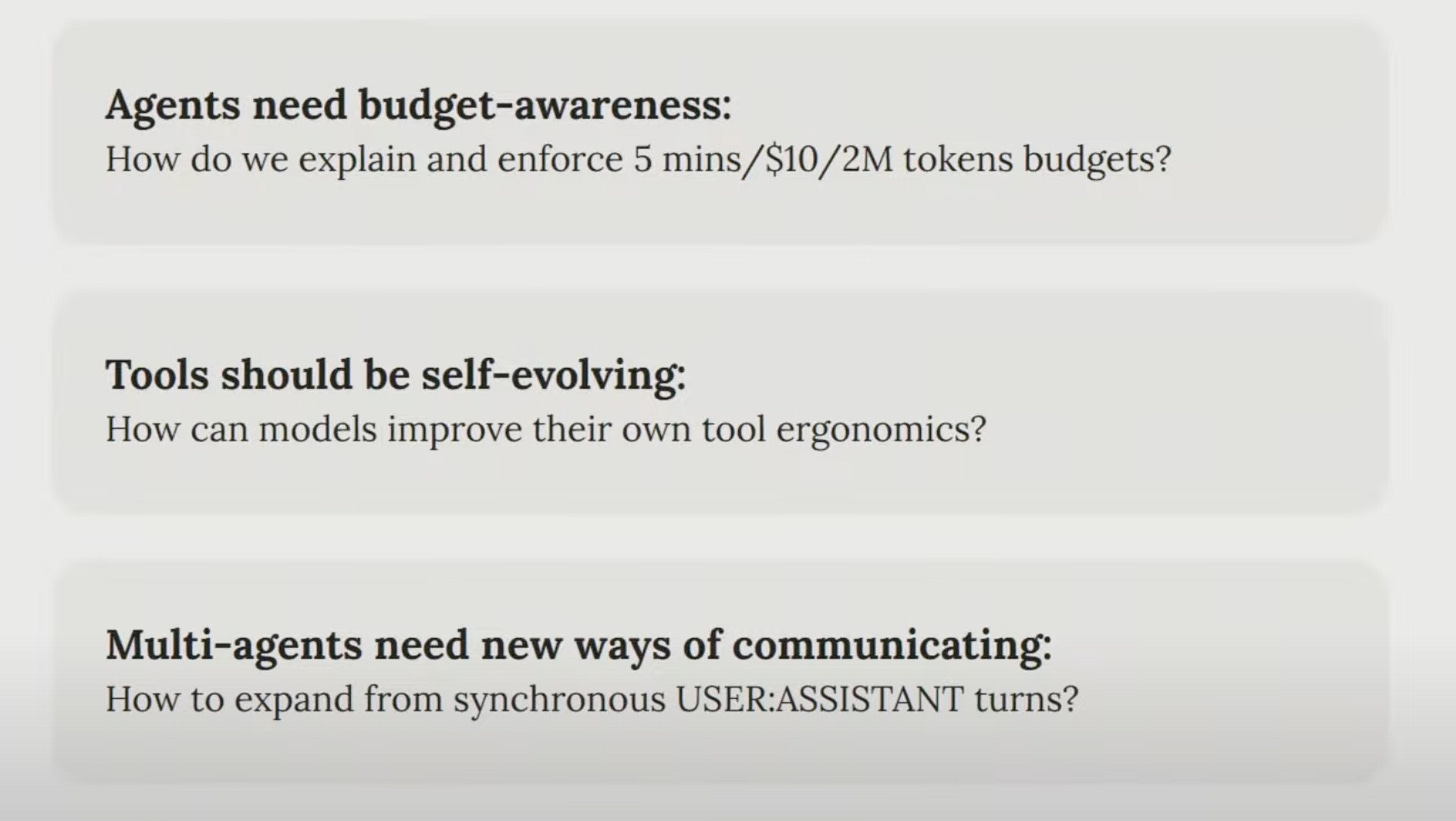

Barry Zhang from Anthropic, in this excellent talk on building effective agents at the AI Engineer Summit in NY last Feb, talks about his personal musings on what else agents will need to get better.

Systems engineering to support stochastic software

I am told that building agentic software feels.. weird. Typically, a team working on a new product focuses a lot on front-end/product engineering. They also invest heavily in crafting the core logic and workflow orchestration. Their approach to UX might not need to be innovative. They don’t worry too much about systems and QA until the business reaches some scale.

When building agentic software, this is no longer the case. The LLM does a lot of heavy lifting in terms of logic/orchestration. In the future, it might also generate user interfaces and adapt front-ends to the problem at-hand. So what do the engineers focus on?

The answers seem to be UX and reliability. Much of the success of early agent startups like Cursor comes down to getting the UX just right for where the model capability currently is. Customer support startup Sierra has invested heavily in their system engineering (testing, release management, QA) to ensure their customer support agents work - as opposed to traditional software startups that might invest more in product engineering. They run anywhere between hundreds to thousands of tests before every new version of each customer’s unique agent is released. The system is their IP - not the model or the product interface.

Open standards supported by established AI Labs

Tool use saw an interesting development last week with multiple coding agent startups like Cursor embracing Anthropic’s Model Context Protocol (MCP) - a unified API standard for LLMs that makes it easier for agentic applications to access and act on data in other systems. This didn’t make news because it was a technical breakthrough, but because in some sense a new standard had emerged and the right people had embraced it over alternatives like LangGraph/OpenAPI.

This won’t be the last standard needed for agent interoperatbility. MCP in particular is a stateful protocol which means it doesn’t scale well over millions of simultaneous user interactions. However, it does make agentic tool use in applications much easier to experiment and test.

Implications for SaaS founders building agentic products

How should Saas founders approach this moment? If you are an independent developer building an AI agent product, how do you future-proof your architecture? Will simply deploying SoTA models with prompt engineering to complete the workflow be the best approach? Or will a competitor investing in SFT and training a model end to end with tool-use RL achieve significantly better results?

The answer seems to be twofold - first, it depends on your use case and second, embrace experimentation. For use cases that SoTa models have already been heavily post-trained on - conversations, coding, etc - agentic systems need the least additional training investments. You might still want to build on open source for reasons of cost, security, etc.

For more novel use cases, it’s clear that simply using SoTa models out of the box won’t give you the most compelling agent products. You need to be able to train them to use the right tools and regulate the amount of inference budget they have to get solutions to open-ended problems. You might even need to try emerging new architectures like Agentic Pretrained Transformers or APT by Scaled Cognition.

This experimentation is where startups have a massive advantage over SaaS incumbents right now. A great startup engineering team, iterating weekly, can ride the exponential and learn 100X faster. However, you must also pick customers and market segments (see my earlier post on bad markets being good for AI agents) that are looking for novel solutions and are desperate to try things that don’t necessarily work all the time, yet.

Many SaaS incumbents, especially those selling to enterprises, have the disadvantage that their customers might not be early adopters of autonomous agents. They are not necessarily going to ask them for this or be the first to adopt them when given the option. This slows down their pace of learning and is the primary reason why you see incumbents lagging behind startups in AI agents despite investing resources and having data + distribution advantages.

Of course, the question of autonomy is a big one. While autonomous AI agents should proliferate in use cases where there are clear, verifiable, correct outcomes; that’s not really most of knowledge work. Fuzzy reasoning dominates knowledge work with no code to compile as a test. These use cases are likely to continue having semi-autonomous agents that work as collaborators with us.

AI Labs: Competitors or Enablers?

To wrap this all up, it’s fascinating to see both OpenAI and Anthropic act as enablers and competitors in the emerging agent ecosystem. It is reminiscent of the personal computing revolution, where companies like Microsoft were building both operating systems and applications - enabling and competing with developers everywhere.

OpenAI and Anthropic are both investing in their own agentic products for significant use cases like coding, research, personal assistance, etc while also supporting agentic SaaS companies that offer (much) better UX alternatives to their customers today at very low price points. It will be fascinating to see which parts of the stack (model/middleware/product) different companies end up dominating.

Great post Sandhya. While AI agents are getting better, what class of problems should be solved via "AI Agents" vs "LLM Workflows"? I believe, this question is critical to answer to understand how AI adoption will happen in the enterprise.